Quantifying How Hateful Communities Radicalize Online Users

Matheus Schmitz, Keith Burghardt, Goran Muric

Social media offers ways for stifled voices to be heard, yet it also allows hate speech from fringe communities to spread to mainstream channels. This paper measures the impact of joining fringe hateful communities by applying causal modeling methods to Reddit data. We measure members' usage of hate speech within and outside four hateful communities before and after they become active participants, and find an increase in hate speech both within and outside the originating community that persists for months, implying that joining such a community leads to a spread of hate speech throughout the platform. Our results provide new evidence of the harmful effects of echo chambers and the potential benefit of moderating them to reduce adoption of hateful speech.

Cite as: Schmitz, M., Burghardt, K., & Muric, G. (2022). Quantifying How Hateful Communities Radicalize Online Users. In: 2022 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining (ASONAM), pp. 139-146. IEEE.

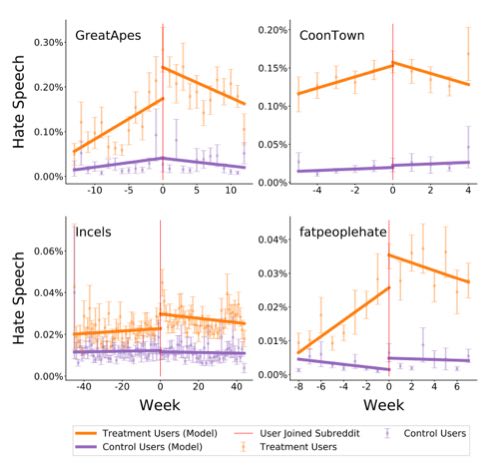

Hate speech frequency outside the hateful subreddit before and after joining. Across all subreddits studied, joining a hateful subreddit increased outward-oriented hate speech compared to control users who never joined.

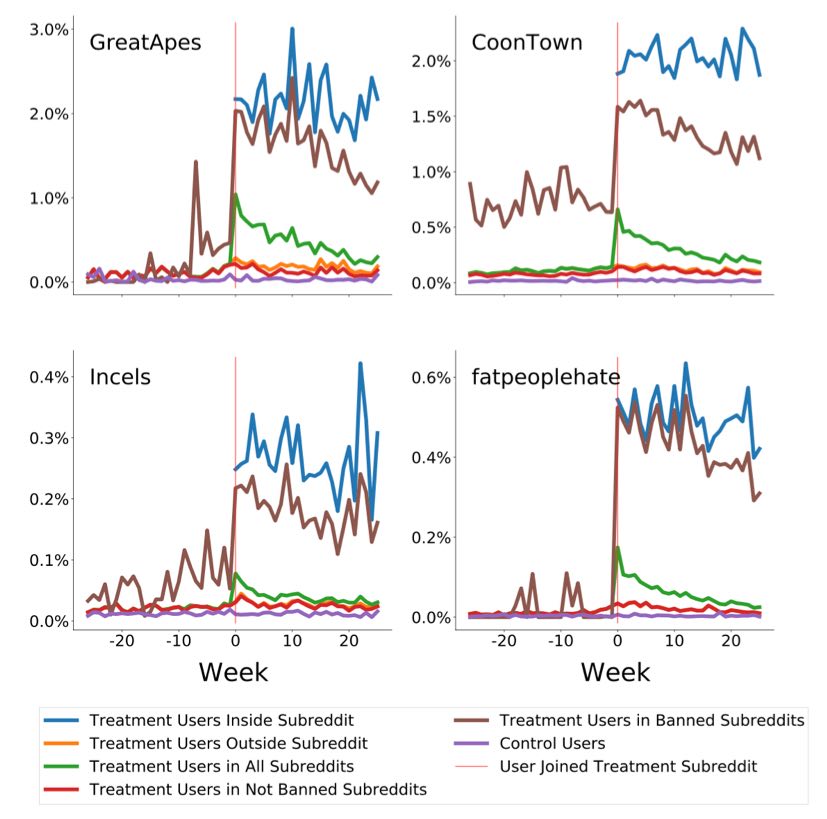

Hate speech frequency inside and outside hateful subreddits before and after joining. We see a dramatic increase in hate speech, especially within subreddits that were later banned. The increase in hate speech persists for several months. After 6 months, the hate speech has yet to fall back to pre-joining levels.
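
The per-month hate speech frequency described in this caption can be illustrated with a minimal sketch. It assumes a simple lexicon-based count over whitespace-separated tokens; the lexicon entries, the `hate_rate_by_month` function, and the 30-day month are hypothetical placeholders, not the authors' actual measurement pipeline.

```python
from collections import defaultdict

# Hypothetical placeholder lexicon; the lexicon used in the paper is not given here.
HATE_LEXICON = {"slur_a", "slur_b"}


def hate_rate_by_month(comments, join_day, days_per_month=30):
    """Return the fraction of tokens that are lexicon terms, per month relative to joining.

    comments: iterable of (day, text) pairs, where day is an integer day index.
    join_day: day index on which the user became active in the hateful subreddit.
    Month 0 starts at join_day; negative months precede joining.
    """
    hate = defaultdict(int)
    total = defaultdict(int)
    for day, text in comments:
        # Floor division keeps the days before joining in negative months.
        month = (day - join_day) // days_per_month
        for token in text.lower().split():
            total[month] += 1
            if token in HATE_LEXICON:
                hate[month] += 1
    return {month: hate[month] / total[month] for month in sorted(total)}


example = [(0, "hello slur_a"), (45, "slur_a slur_b"), (70, "fine words here now")]
rates = hate_rate_by_month(example, join_day=40)
```

Comparing the values for negative months against those for months 0 and later gives the kind of before-and-after contrast the figure plots. A causal estimate would additionally require the matched control users mentioned in the first figure.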

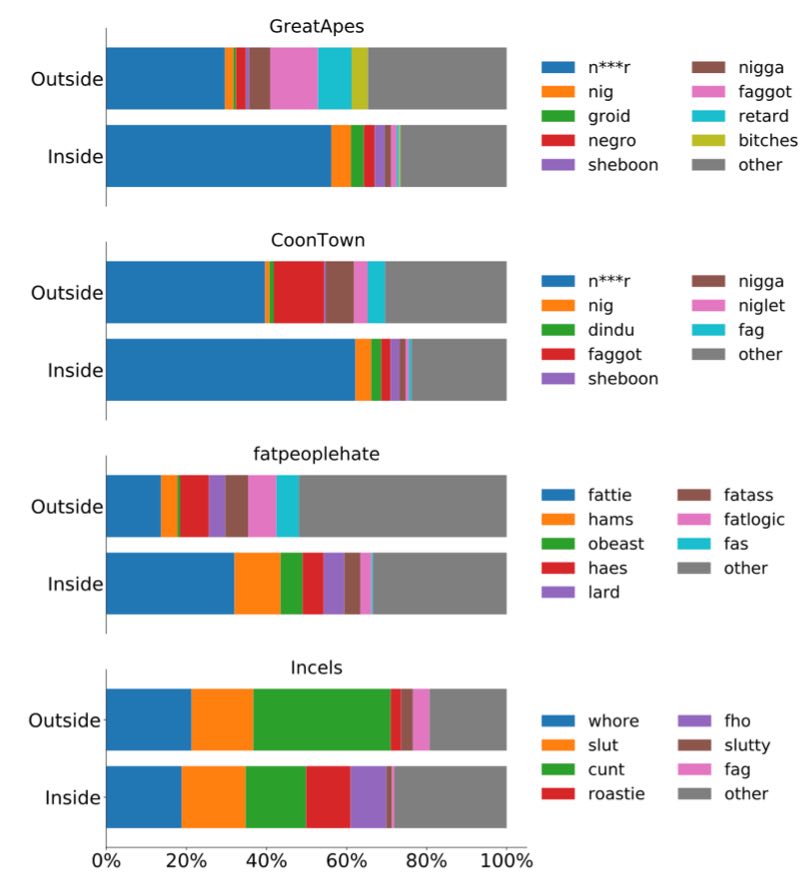

Distributions of the most frequent hate words used inside and outside of each observed subreddit. When hate speech is used outside the hate subreddit, in-group language (e.g., "fho" or "hams") is noticeably missing.

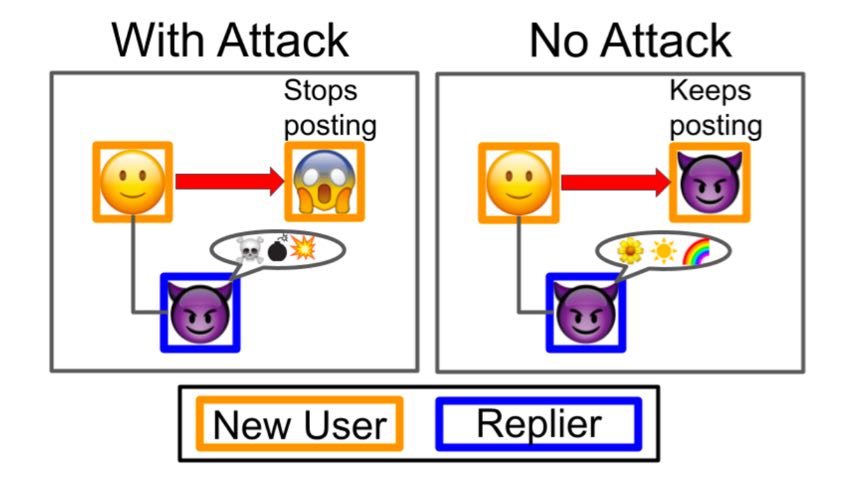

Schematic of the hateful subreddit growth simulation. A mixed-effects logistic regression model predicts whether a user continues commenting or leaves the subreddit. The predictions are then counted to calculate the cumulative number of engaged users in a subreddit.
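
The predict-then-count loop in this schematic can be sketched roughly as follows. This is an illustration under stated assumptions, not the authors' implementation: the coefficients, the single feature, the 0.5 decision threshold, and the function names `continue_probability` and `simulate_growth` are all hypothetical, and the mixed-effects part is reduced to a per-user random intercept passed in as `group_effect`.

```python
import math
from itertools import accumulate


def continue_probability(features, coefs, intercept, group_effect=0.0):
    """Logistic model; group_effect stands in for a random intercept (assumption)."""
    z = intercept + group_effect + sum(c * x for c, x in zip(coefs, features))
    return 1.0 / (1.0 + math.exp(-z))


def simulate_growth(arrivals, coefs, intercept, threshold=0.5):
    """Count users predicted to keep commenting, cumulatively over time steps.

    arrivals: list of time steps; each step is a list of
              (features, group_effect) tuples, one per newly arriving user.
    threshold: hypothetical cut-off turning a probability into a stay/leave prediction.
    """
    per_step = [
        sum(
            continue_probability(feats, coefs, intercept, effect) >= threshold
            for feats, effect in step
        )
        for step in arrivals
    ]
    return list(accumulate(per_step))


# One hypothetical feature (reply toxicity) with a negative coefficient.
arrivals = [
    [([0.0], 0.0), ([1.0], 0.0)],  # step 1: one low-toxicity, one high-toxicity reply
    [([0.2], 0.0)],                # step 2: one mildly toxic reply
]
growth = simulate_growth(arrivals, coefs=[-2.0], intercept=1.0)
```

Thresholding keeps the sketch deterministic; sampling each user's outcome from the predicted probability would be an equally plausible reading of the schematic.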

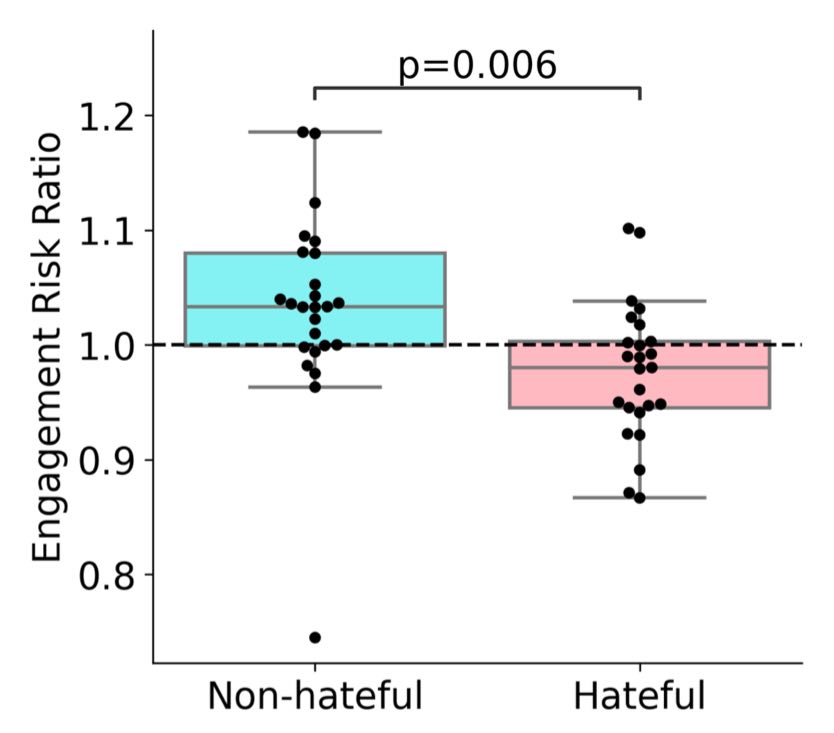

Replies in hateful subreddits lead to significantly less engagement than replies in non-hateful subreddits. The y-axis shows the risk ratio: the probability that a user continues to post after receiving a reply, divided by the probability that a user continues to post without a reply. Values above one indicate that replies correlate with greater engagement, while values below one indicate less engagement after a reply.
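
As a worked illustration of the ratio plotted on the y-axis, the sketch below computes it from raw counts. The function name `risk_ratio` and the example counts are hypothetical and are not taken from the paper's data.

```python
def risk_ratio(cont_with_reply, n_with_reply, cont_without_reply, n_without_reply):
    """P(continue posting | reply) divided by P(continue posting | no reply).

    All four arguments are user counts; both group sizes and the number of
    users continuing without a reply are assumed to be non-zero.
    """
    p_with = cont_with_reply / n_with_reply
    p_without = cont_without_reply / n_without_reply
    return p_with / p_without


# Hypothetical counts: 30 of 100 replied-to users continue, versus 40 of 100 without a reply.
ratio = risk_ratio(30, 100, 40, 100)
```

With these made-up counts the ratio is about 0.75, which falls below one and would therefore read as less engagement after a reply.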

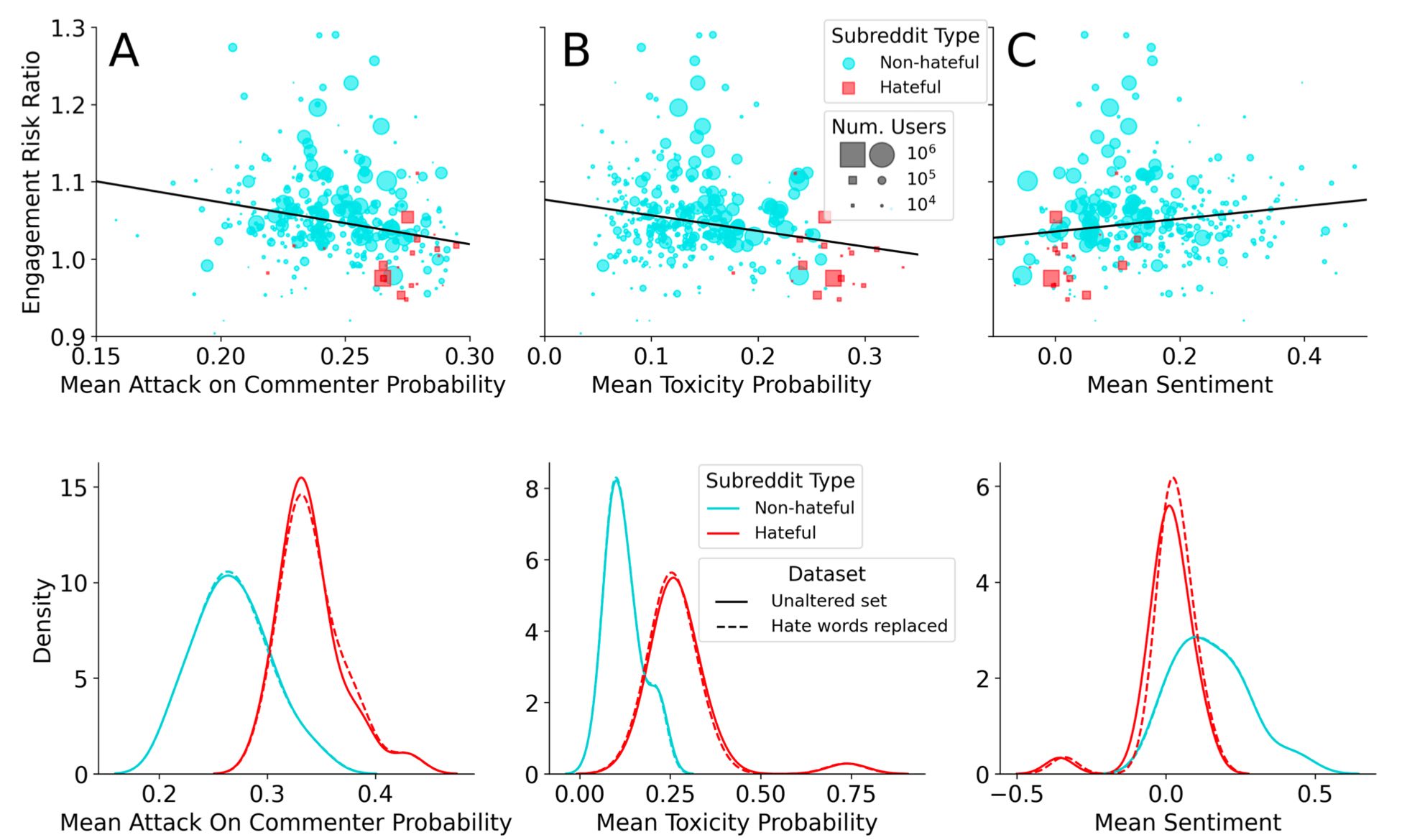

The likelihood that replies increase activity is negatively correlated with attacks and toxicity, and positively correlated with sentiment.

Attacks and toxicity are higher in replies within hateful subreddits, while sentiment is lower.

Top row: relationships between engagement risk ratios and mean attack on commenter probability (A), toxicity (B), and sentiment (C) of subreddits.

Bottom row: distributions across all users for (A) attack on commenter probability, (B) toxicity probability, and (C) valence for replies in hateful and non-hateful subreddits.

Substitution of synonymous non-hate terms does not alter the ability of the models to distinguish between subreddit types.

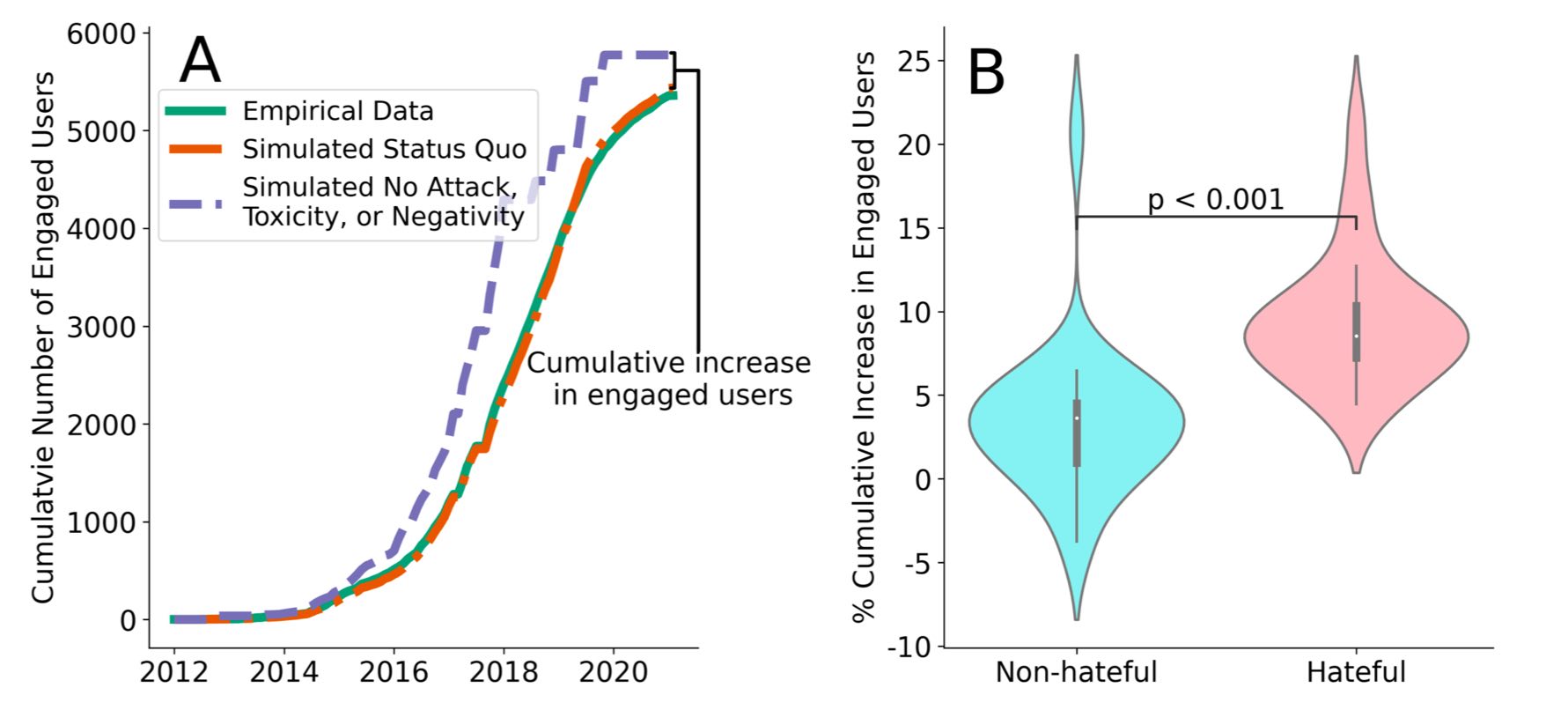

Simulated growth of subreddits. (A) Empirical and simulated growth curves of engaged users in r/MGTOW. (B) Predicted increases in engaged users for hateful and non-hateful subreddits when the attack-on-commenter probability, toxicity, and sentiment of negative replies are set to zero. White dots represent medians, thick gray bars represent the interquartile range, and thin gray lines represent 95% quantiles. These results indicate that hateful subreddits grow more slowly than they potentially could because of the nature of their replies; in this sense, hateful subreddits are counterproductive to their own growth because their hate is undirected.
